Calling Databricks Notebooks from Data Factory Pipelines

You may have noticed a new activity in your Data Factory pipelines in Fabric.

That’s right, you can now call Databricks notebooks from Fabric. It’s a small step, but one in the right direction, and it opens the door a bit wider for hybrid architectures that combine the strengths of both platforms:

- Data Transformation and Cleansing in Databricks: Leverage Databricks for heavy-duty data transformation tasks, whether that’s wrangling messy data, applying complex transformations, or running machine learning models.

- Orchestration within Fabric: Keep the orchestration layer within Fabric. This means managing your pipeline schedules, dependencies, and overall workflow orchestration in one place, and if you are storing data in OneLake, you’re simplifying connectivity too.

You may be wondering why I’m so excited about such a small change. For starters, it opens the door for organisations that already have an established Databricks Lakehouse platform:

- Leveraging Existing Databricks Platforms: If you’re already invested in Databricks, you don’t need to rebuild your entire platform. Instead, you can adopt and integrate with Fabric to make the most of the features important to you. This is especially valuable for organisations with established Databricks workflows.

- Direct Lake Connectivity to Power BI: It’s now easier to take advantage of Fabric’s deeper integration with Power BI through Direct Lake.

- Use the best tool for the job: Databricks is “currently” working with Delta Lake 3.0 and a newer version of Spark than Fabric. Where those new features and performance improvements matter, you can take advantage of them and still make use of Fabric, without having to explicitly choose one or the other.

Dig into the Activity

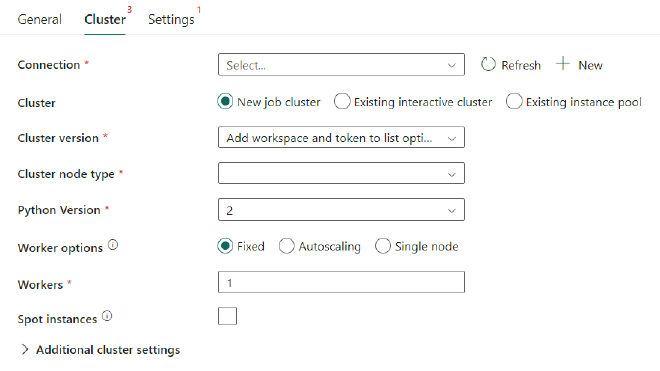

Looking at the activity, we’ve got the option to use a new or an existing cluster, which is great for looping round entities in a metadata-driven framework.

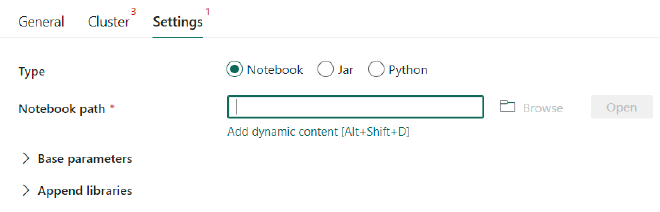

The ability to add dynamic content to the notebook path is also very welcome for the same reason. This can be parameterised and fed in through metadata 😍
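To make the metadata-driven idea a bit more concrete, here’s a rough Python sketch of what “fed in through metadata” could look like. It is not Fabric or Databricks API code: the `Entity` fields, the `/Shared/etl` root folder and the parameter names are all made up for illustration. It simply shows how one metadata row per entity can resolve to the notebook path and parameters you’d pass to the activity inside a loop.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entity:
    """One row of hypothetical pipeline metadata (field names are assumptions)."""
    name: str      # entity being processed, e.g. a table name
    layer: str     # e.g. "bronze" or "silver"
    notebook: str  # notebook name, without the folder


def notebook_path(entity: Entity, root: str = "/Shared/etl") -> str:
    """Build the workspace path the activity's dynamic content would resolve to."""
    return f"{root.rstrip('/')}/{entity.layer}/{entity.notebook}"


def base_parameters(entity: Entity) -> dict[str, str]:
    """Parameters to hand to the notebook for this entity."""
    return {"entity_name": entity.name, "layer": entity.layer}


# Illustrative metadata; in practice this would come from a control table or file.
metadata = [
    Entity("customers", "bronze", "ingest_generic"),
    Entity("orders", "silver", "transform_orders"),
]

# Equivalent of looping over the entities and calling the notebook once per row.
for entity in metadata:
    print(notebook_path(entity), base_parameters(entity))
```

In a real pipeline the loop and the path building would live in the pipeline itself, with the notebook path set through dynamic content, but the shape of the metadata is the same idea.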

What’s Next? Secure Network Connectivity

While the current integration is exciting, keep an eye out for secure network connectivity enhancements. This is one of the biggest blockers to adoption I’m seeing at the moment among larger enterprise customers, who just can’t integrate secure resources with Fabric yet.