Amid the plethora of announcements coming out of the Data + AI Summit a few weeks back, there was a distinct message from Databricks on the importance of data sharing.

Matei Zaharia during the Data + AI Summit 2022 Keynote

During the keynote, Matei Zaharia cited a Gartner report stating that they

“…expect organisations who share data and are part of an ecosystem to see 3x better economic performance.”

Gartner

That’s a significant projection but not all that unexpected.

Having worked with many organisations across different industries and sectors, I’ve found that sharing data with partners and vendors is always a pain point, and one that all too often results in neither party quite getting what they want or need. This isn’t restricted to my experience, however, which is why Databricks announced Delta Sharing back at Data + AI Summit 2021.

Coming to this year’s conference, Delta Sharing has been established as the foundation for many new features. The newly announced Databricks Marketplace and Cleanrooms, for example, are both built upon the Delta Sharing protocol. We’ll explore Cleanrooms below and I’ll look at the Databricks Marketplace in its own post.

Delta Sharing

In this year’s keynote Matei announced Delta Sharing is now Generally Available, clearing a path for customers to use it in production scenarios and further driving adoption of the open source protocol.

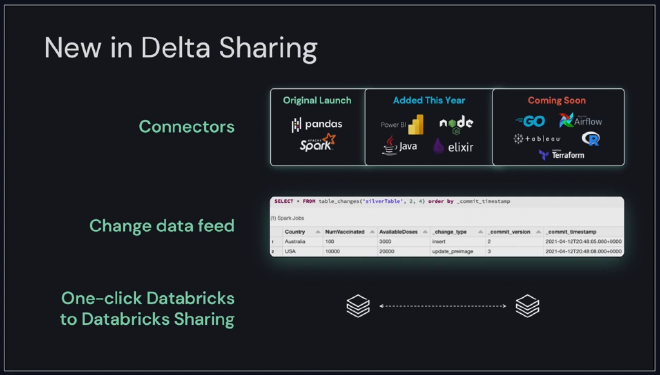

They’ve also expanded the capabilities of Delta Sharing with more connectors: BI tools such as Power BI are supported, with Tableau, Terraform, Apache Airflow, and more coming soon.

But what is Delta Sharing?

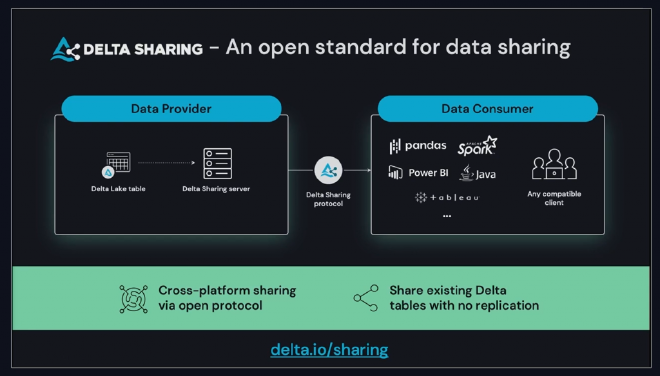

Delta Sharing is an open source protocol for sharing data.

Delta Sharing builds upon the Delta file format (also open source), which makes up the backbone of the Data Lakehouse architecture pattern. It exposes the data through a REST API that any platform able to process the underlying Parquet files can interact with.
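To make the REST API a little more concrete, here is a minimal sketch of what a recipient’s calls look like, based on my reading of the open source protocol specification. It only builds the HTTP requests and doesn’t send them. The endpoint, token, and the share, schema, and table names are all placeholders I’ve made up for illustration, and the helper function names are my own rather than part of any official client.

```python
import json
from urllib.parse import quote
from urllib.request import Request

# Hypothetical profile contents. A provider hands the recipient a small JSON
# "profile" like this; the endpoint and token below are placeholders.
PROFILE_JSON = """
{
  "shareCredentialsVersion": 1,
  "endpoint": "https://sharing.example.com/delta-sharing/",
  "bearerToken": "<token>"
}
"""


def load_profile(text):
    """Parse a profile and trim the trailing slash from the endpoint."""
    profile = json.loads(text)
    profile["endpoint"] = profile["endpoint"].rstrip("/")
    return profile


def _auth_headers(profile):
    # The protocol authenticates recipients with a bearer token.
    return {"Authorization": f"Bearer {profile['bearerToken']}"}


def list_shares_request(profile):
    """Build (but do not send) the request that lists available shares."""
    url = f"{profile['endpoint']}/shares"
    return Request(url, headers=_auth_headers(profile), method="GET")


def query_table_request(profile, share, schema, table, limit_hint=None):
    """Build (but do not send) the request that asks for a table's data files."""
    path = "/".join(quote(part, safe="") for part in (share, schema, table))
    share_q, schema_q, table_q = path.split("/")
    url = (
        f"{profile['endpoint']}/shares/{share_q}"
        f"/schemas/{schema_q}/tables/{table_q}/query"
    )
    body = {}
    if limit_hint is not None:
        # A hint only: the server may return more rows than requested.
        body["limitHint"] = limit_hint
    headers = {**_auth_headers(profile), "Content-Type": "application/json"}
    return Request(
        url, data=json.dumps(body).encode("utf-8"), headers=headers, method="POST"
    )


if __name__ == "__main__":
    profile = load_profile(PROFILE_JSON)
    request = query_table_request(
        profile, "sales_share", "default", "orders", limit_hint=100
    )
    print(request.get_method(), request.full_url)
```

As I understand the protocol, the response to the query call describes the table and points at the underlying Parquet files rather than streaming rows, which is why any platform that can read Parquet can act as a recipient. In practice you would use one of the existing connectors rather than hand-rolling requests like this.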

A key benefit of Delta Sharing is that you aren’t locked into a specific vendor for the underlying data or the sharing capabilities. You could have Azure Synapse, Databricks, or any other platform on top of your data lake and you can use Delta Sharing. By sharing data directly from Delta tables there is no need to copy or move data elsewhere.

Matei mentioned that petabytes of data have already been shared using Delta Sharing. Even so, it’s only now that Databricks has made Delta fully open source with Delta Lake 2.0 (that’s every feature, even the Databricks-only ones) that I expect we’ll start to see much wider adoption of Delta Sharing, as vendors and platform providers embrace the Data Lakehouse architecture.

That said, the organisations I know that struggle to get reliable, timely, or even non-aggregated data from vendors and partners still have a way to go. Delta as a file format needs to be adopted widely enough by both parties before this becomes as easy as it looks.

Databricks Cleanrooms

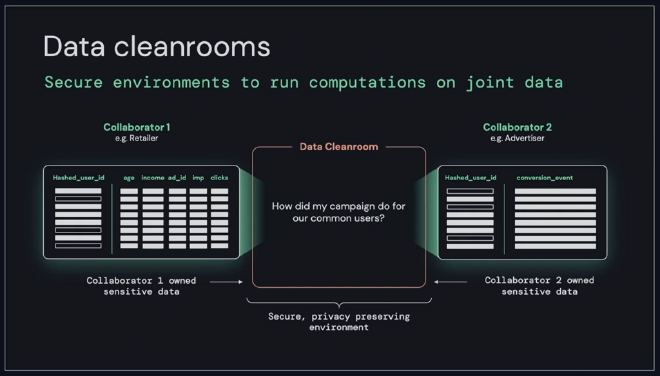

As previously mentioned, Data Cleanrooms is a new feature built upon the Delta Sharing protocol. It’s not a new concept, however: many vendors and cloud providers offer their own solutions for sharing datasets between organisations. Facebook provides data clean rooms to its advertisers to share aggregated customer data whilst still controlling how it is used. Banks share data for fraud prevention but, as they naturally compete for customers, this has to be tightly controlled and managed. It’s a well-established concept in many types of data sharing relationships.

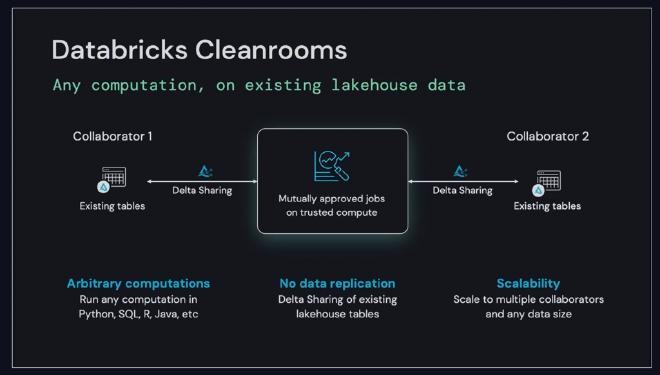

What Databricks brings to the table is the underlying Delta Sharing protocol. You aren’t locked into using SQL for data analysis; you have the same flexibility as the Data Lakehouse - Python, R, Java, SQL etc. This opens up many more possibilities for machine learning and advanced analytics on the shared dataset(s). Working with the Delta file format means you don’t need to move data either: both parties work from their own data lakehouse platform, and queries and jobs are mutually approved before they can be executed.

Databricks Cleanrooms isn’t yet in public or private preview, so I’ve not had a hands-on play with it.

I think it will be interesting to see how this and Delta Sharing work with a fully secure implementation of Databricks, behind VNets and private endpoints, which is becoming the standard approach for financial organisations.